Hi all,

Today, I’m going to give you a quick overview of how to load an ElasticSearch index (and make it searchable!) with data coming from Twitter. That’s pretty easy with the ElasticSearch Twitter River! You should be familiar with ElasticSearch in order to fully understand what follows. To learn more about the river, please read this.

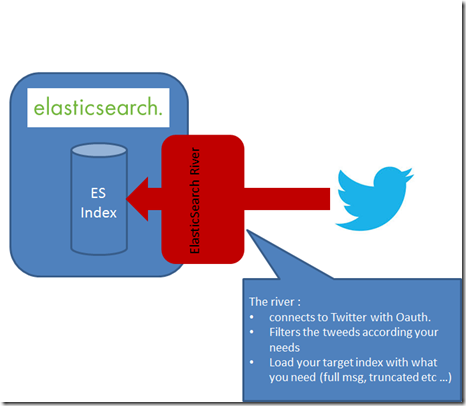

As usual, a quick schema to explain how it works.

Installation

Pretty simple, as it comes as a plugin. Just type and run (for release 2.0.0):

- bin/plugin -install elasticsearch/elasticsearch-river-twitter/2.0.0

Then, if all went fine, you should have your plugin installed.

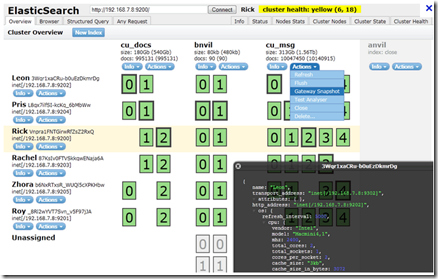

I highly recommend installing another plugin called Head. This plugin lets you fully use and manage your ElasticSearch system from a neat and comfortable GUI. Here is a pic:

Register to Twitter

Now you need to be registered on Twitter in order to use the river. Please log in to https://dev.twitter.com/apps/ and follow the process. The outcome of this process is the four credentials needed to use the Twitter API:

- Consumer key (aka API key),

- Consumer secret (aka API secret),

- Access token,

- Access token secret.

These tokens will be used in the query when creating the river (see below).
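If you would rather not paste the four credentials by hand each time, here is a minimal Python sketch that reads them from environment variables and returns them under the field names the river query expects. The environment variable names (TWITTER_CONSUMER_KEY and friends) are my own invention for this example, not something Twitter or the plugin defines.

```python
import os

# Hypothetical environment variable names (an assumption of this sketch),
# mapped to the oauth field names used in the river creation query.
ENV_NAMES = {
    "consumer_key": "TWITTER_CONSUMER_KEY",
    "consumer_secret": "TWITTER_CONSUMER_SECRET",
    "access_token": "TWITTER_ACCESS_TOKEN",
    "access_token_secret": "TWITTER_ACCESS_TOKEN_SECRET",
}


def load_oauth(env=None):
    """Return the four OAuth credentials as a dict, or fail loudly."""
    env = os.environ if env is None else env
    missing = sorted(var for var in ENV_NAMES.values() if not env.get(var))
    if missing:
        raise KeyError("missing credentials: " + ", ".join(missing))
    return {field: env[var] for field, var in ENV_NAMES.items()}
```

Failing early on a missing value is a deliberate choice here: a river created with an empty credential would only complain later, when it tries to connect to Twitter.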

A simple river

OK, here we go. Just imagine I want to load, in near real time and continuously, a new index with all the tweets about … Ukraine (since today, May 9 2014, the cold war is about to start again …).

That’s pretty simple: connect to your shell, make sure ElasticSearch is running and that you have all the necessary credentials. Then send the PUT query below. Don’t forget to type your own OAuth credentials (mine are masked as ****** below).

    curl -XPUT http://localhost:9200/_river/my_twitter_river/_meta -d '
    {
      "type": "twitter",
      "twitter": {
        "oauth": {
          "consumer_key": "******************",
          "consumer_secret": "**************************************",
          "access_token": "**************************************",
          "access_token_secret": "********************************"
        },
        "filter": {
          "tracks": "ukrain",
          "language": "en"
        }
      },
      "index": {
        "index": "my_twitter_river",
        "type": "status",
        "bulk_size": 100,
        "flush_interval": "5s"
      }
    }
    '
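For those who prefer scripting this over typing curl, here is a rough Python equivalent of the PUT query, using only the standard library. The function names (build_river_meta, create_river) are mine, and I place the index block at the top level next to twitter, which is where I understand the plugin reads it from; please check that against the plugin README before relying on it.

```python
import json
import urllib.request


def build_river_meta(oauth, tracks, language="en",
                     index="my_twitter_river", doc_type="status",
                     bulk_size=100, flush_interval="5s"):
    """Build the river _meta document as a plain dict."""
    return {
        "type": "twitter",
        "twitter": {
            "oauth": dict(oauth),
            "filter": {"tracks": tracks, "language": language},
        },
        # Assumption: index settings live beside "twitter", not inside it.
        "index": {
            "index": index,
            "type": doc_type,
            "bulk_size": bulk_size,
            "flush_interval": flush_interval,
        },
    }


def create_river(meta, name="my_twitter_river",
                 base_url="http://localhost:9200"):
    """PUT the _meta document; needs a running ElasticSearch node."""
    url = "{}/_river/{}/_meta".format(base_url, name)
    request = urllib.request.Request(
        url,
        data=json.dumps(meta).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# Typical use, with your own credentials in place of the stars:
#   oauth = {"consumer_key": "***", "consumer_secret": "***",
#            "access_token": "***", "access_token_secret": "***"}
#   print(create_river(build_river_meta(oauth, tracks="ukrain")))
```

Building the payload separately from sending it makes it easy to print and inspect the JSON before anything reaches the cluster.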

After submitting this query, you should see something like:

    [2014-05-09 10:58:58,533][INFO ][cluster.metadata ] [Rex Mundi] [_river] creating index, cause [auto(index api)], shards [1]/[1], mappings []
    [2014-05-09 10:58:58,868][INFO ][cluster.metadata ] [Rex Mundi] [_river] update_mapping [my_twitter_river] (dynamic)
    [2014-05-09 10:58:59,000][INFO ][river.twitter ] [Mogul of the Mystic Mountain] [twitter][my_twitter_river] creating twitter stream river
    {"_index":"_river","_type":"my_twitter_river","_id":"_meta","_version":1,"created":true}tor@ubuntu:~/elasticsearch/elasticsearch-1.1.1$
    [2014-05-09 10:58:59,111][INFO ][cluster.metadata ] [Rex Mundi] [my_twitter_river] creating index, cause [api], shards [5]/[1], mappings [status]
    [2014-05-09 10:58:59,381][INFO ][river.twitter ] [Mogul of the Mystic Mountain] [twitter][my_twitter_river] starting filter twitter stream
    [2014-05-09 10:58:59,395][INFO ][twitter4j.TwitterStreamImpl] Establishing connection.
    [2014-05-09 10:58:59,796][INFO ][cluster.metadata ] [Rex Mundi] [_river] update_mapping [my_twitter_river] (dynamic)
    [2014-05-09 10:59:31,221][INFO ][twitter4j.TwitterStreamImpl] Connection established.
    [2014-05-09 10:59:31,221][INFO ][twitter4j.TwitterStreamImpl] Receiving status stream.

A quick explanation of the river creation query:

- A river called “my_twitter_river” is registered in the “_river” index

- 2 filters are used:

    - tracks: the keyword(s) to track

    - language: the tweet language(s) to track

    - For the filters, refer directly to the Twitter API

- An index called “my_twitter_river” is created

- A type (aka a table) called “status” is created

- Tweets will be indexed:

    - once a bulk size of them has been accumulated (100, default)

    - OR

    - every flush interval period (5 seconds, default)
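To make the "bulk size OR flush interval" rule concrete, here is a small Python sketch of that kind of buffering. It is only an illustration of the rule as described above, not the plugin's actual code; the class name BulkBuffer and the explicit `now` argument (used instead of a real clock so the behaviour is easy to follow) are my own choices.

```python
class BulkBuffer:
    """Collects documents and releases them in batches.

    A batch is released when bulk_size documents are pending, or when
    flush_interval seconds have passed since the last release.
    """

    def __init__(self, bulk_size=100, flush_interval=5.0):
        self.bulk_size = bulk_size
        self.flush_interval = flush_interval
        self.pending = []
        self.last_flush = 0.0

    def add(self, doc, now):
        """Queue one document; return a batch if the size limit is hit."""
        self.pending.append(doc)
        if len(self.pending) >= self.bulk_size:
            return self.flush(now)
        return []

    def tick(self, now):
        """Call periodically; return a batch if the interval has elapsed."""
        if self.pending and now - self.last_flush >= self.flush_interval:
            return self.flush(now)
        return []

    def flush(self, now):
        """Release everything pending and restart the interval."""
        batch, self.pending = self.pending, []
        self.last_flush = now
        return batch
```

With the defaults, a busy keyword fills batches of 100 tweets quickly, while a quiet one still gets its few tweets indexed within about 5 seconds.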

By now, you should see the data coming into your newly created index.

A first query

Now, time to run a simple query on this Ukraine-related data.

For now, I will only send a basic query based on a keyword, but stay tuned because I will soon demonstrate how to create analytics on these tweets …

Simple query to retrieve tweets containing the word “Putin”:

    curl -XPOST http://localhost:9200/_search -d '{
      "query": {
        "query_string": {
          "query": "Putin",
          "fields": [
            "text"
          ]
        }
      }
    }'

… and you’ll have something like:
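The search API answers with a JSON document whose hits.hits array carries one entry per matching tweet, with the tweet itself under _source. Here is a minimal Python sketch that builds the same query body and pulls the tweet texts out of such a response. The function names are mine, and the sample response is a made-up placeholder trimmed to the fields the sketch reads, not real output from my index.

```python
def build_query(keyword, fields=("text",)):
    """Build the query_string search body used in the curl example."""
    return {
        "query": {
            "query_string": {"query": keyword, "fields": list(fields)}
        }
    }


def extract_texts(response):
    """Return the text of every tweet found in a search response."""
    return [hit["_source"].get("text", "") for hit in response["hits"]["hits"]]


# Made-up placeholder response, for illustration only.
sample_response = {
    "hits": {
        "total": 1,
        "hits": [
            {
                "_index": "my_twitter_river",
                "_type": "status",
                "_id": "placeholder-id",
                "_source": {"text": "placeholder tweet mentioning Putin"},
            }
        ],
    }
}

for text in extract_texts(sample_response):
    print(text)
```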
