Hi all,

As promised, here is an article about a really nice piece of software that lets you run data cleansing and data mining jobs, and have fun doing it.

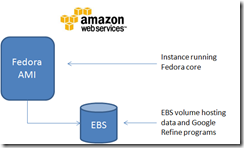

Let’s deploy Google Refine on Amazon Web Services (aka “le cloud”).

Google Refine?

According to Google, “Google Refine is a power tool for working with messy data, cleaning it up, transforming it from one format into another, extending it with web services, and linking it to databases like Freebase.”

With Google Refine, it’s easy to load big data files and process them: merging cells, clustering, grouping, adding key/value pairs, transcoding, modifying or customizing data through web service calls... Just imagine an Excel grid on steroids.

Let’s go now...

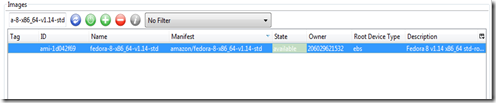

As usual, you first need a valid AWS/EC2 account. Once that’s done, you need an instance (a server). I recommend a Fedora instance for Google Refine rather than an Ubuntu one. I’m a big fan of Ubuntu and use it in a lot of critical apps, but I ran into many issues running Google Refine on top of an Ubuntu Lucid AMI (RAM usage, freezes, erratic JDK behaviour). So please choose the Fedora one instead: amazon/fedora-8-x86_64-v1.14-std. Of course, go with 64 bits, and attach an EBS volume to the instance so that your data persists over time (anything not stored on EBS is ephemeral).

I won’t detail how to create and start an instance on AWS, but here are the major steps. You can easily go through them using the Firefox plugin called Elasticfox. Or, for the guys with muscles, use the Amazon EC2 API tools, which can be downloaded here.

- Create a key pair and download the private key: this will give you SSH access to your instance.

- Create a security group: create a dedicated security group for your instance and open port 22 for SSH and port 3333 for the Google Refine web service.

- Choose your image: amazon/fedora-8-x86_64-v1.14-std is a good choice. It corresponds to AMI ami-1d042f69.

- Run your instance:

  - Be careful with the availability zone. This one is in the USA, but you can also place your instance in Ireland or in Asia. Make sure you don’t put yourself outside the law by storing sensitive data outside your safe harbor.

  - Be sure to assign the key pair and the security group you created in the first two steps.

  - Choose an instance size. As I have plenty of money, I chose an m2.xlarge instance, which has plenty of RAM.

- SSH into your instance: you can use PuTTY, but don’t forget to convert the .pem key (Unix-style private key) into a .ppk key (PuTTY’s private key format), for example with PuTTYgen.

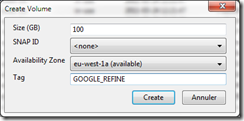
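For those who prefer the EC2 API tools to Elasticfox, the AWS-side steps of this walkthrough (key pair, security group, instance, plus the EBS volume and Elastic IP steps described below) can be sketched as a few shell functions. This is a minimal sketch, not a tested recipe: it assumes the legacy Amazon EC2 API tools (since superseded by the AWS CLI) are installed, configured with your credentials (for example through the EC2_PRIVATE_KEY and EC2_CERT environment variables) and pointed at your region. Apart from the AMI id, the m2.xlarge size and the 100 GB volume taken from this post, every name and value (refine-key, refine, us-east-1a, MY_CIDR) is hypothetical. One deliberate deviation: ports 22 and 3333 are opened only to MY_CIDR, because Refine has no login of its own; set it to 0.0.0.0/0 to open them to everyone. The volume is created in the same ZONE as the instance, since an EBS volume can only be attached to an instance in its own availability zone.

```shell
#!/usr/bin/env bash
# Source this file, then call the functions one at a time: later steps
# need the ids (i-..., vol-...) printed by earlier ones.
# All values are hypothetical except AMI_ID and INSTANCE_TYPE (from the post).

KEY_NAME="refine-key"        # hypothetical key pair name
GROUP_NAME="refine"          # hypothetical security group name
AMI_ID="ami-1d042f69"        # amazon/fedora-8-x86_64-v1.14-std
INSTANCE_TYPE="m2.xlarge"
ZONE="us-east-1a"            # assumption: use your own availability zone
MY_CIDR="203.0.113.10/32"    # hypothetical: your workstation's public IP

# Step 1: create the key pair and keep only the PEM block of the output.
create_key_pair() {
  ec2-add-keypair "$KEY_NAME" | sed -n '/BEGIN/,/END/p' > "$KEY_NAME.pem"
  chmod 600 "$KEY_NAME.pem"   # ssh refuses keys readable by others
}

# Step 2: dedicated security group with SSH (22) and Refine (3333) open.
create_security_group() {
  ec2-add-group "$GROUP_NAME" -d "Google Refine server"
  ec2-authorize "$GROUP_NAME" -P tcp -p 22 -s "$MY_CIDR"
  ec2-authorize "$GROUP_NAME" -P tcp -p 3333 -s "$MY_CIDR"
}

# Steps 3-4: launch the Fedora AMI with the key pair and group above.
run_instance() {
  ec2-run-instances "$AMI_ID" -k "$KEY_NAME" -g "$GROUP_NAME" \
    -t "$INSTANCE_TYPE" -z "$ZONE"
}

# EBS volume: 100 GB in the instance's zone, attached as /dev/sdf.
create_volume() {
  ec2-create-volume --size 100 -z "$ZONE"
}

attach_volume() {  # usage: attach_volume vol-xxxxxxxx i-xxxxxxxx
  ec2-attach-volume "$1" -i "$2" -d /dev/sdf
}

# Elastic IP: allocate an address (2nd field of the output) and bind it.
assign_elastic_ip() {  # usage: assign_elastic_ip i-xxxxxxxx
  local ip
  ip=$(ec2-allocate-address | awk '{print $2}')
  ec2-associate-address -i "$1" "$ip"
}
```

For example, after `source ec2-refine.sh` (a hypothetical file name), call `create_key_pair`, `create_security_group` and `run_instance`; `ec2-describe-instances` then shows the instance id you need for `attach_volume` and `assign_elastic_ip`. Splitting the steps into functions keeps each one easy to rerun on its own.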

- Create a volume and attach it to your newly running instance. This volume will be used to store Google Refine itself and all the data you will work with.

  - You can create the volume with Elasticfox and attach it to the instance from there; it must be in the same availability zone as the instance. Below you can find the different prompts. I chose 100 GB, but you can make it smaller.

  - Once the volume is created and attached to the instance, simply create a filesystem on it, using ext3 for instance: `sudo mkfs.ext3 /dev/sdf`

  - Create a mount point in /mnt: `sudo mkdir /mnt/refine`

  - Add the mount point to /etc/fstab.

  - Mount the newly created volume: `sudo mount /mnt/refine`

- Download and install JDK 1.6:

  - Download it: `sudo wget -O jdk-6u24-linux-x64.bin "http://cds.sun.com/is-bin/INTERSHOP.enfinity/WFS/CDS-CDS_Developer-Site/en_US/-/USD/VerifyItem-Start/jdk-6u24-linux-x64.bin?BundledLineItemUUID=msmJ_hCxeGwAAAEuarYWpm05&OrderID=uCSJ_hCxvZsAAAEuWrYWpm05&ProductID=oSKJ_hCwOlYAAAEtBcoADqmS&FileName=/jdk-6u24-linux-x64.bin"` (the quotes matter: unquoted, the shell treats each `&` as a background operator and truncates the URL)

  - Install the JDK: `sudo sh jdk-6u24-linux-x64.bin`

  - Don’t forget to set JAVA_HOME and add the JDK’s bin directory to your PATH in your bash profile.

  - Test your JDK by simply typing `java -version` in a shell.

- Then assign an Elastic IP to your instance. This makes it easier to connect to your instance and start using Google Refine. Once again, Elasticfox will save you a lot of time. (Of course, you can assign the Elastic IP before connecting with SSH, which is more logical, I admit...)

- Download and install Google Refine. This is really a no-brainer...

  - Go into /mnt/refine (that is, onto your EBS volume): `cd /mnt/refine`

  - Download Refine: `sudo wget http://google-refine.googlecode.com/files/google-refine-2.0-r1836.tar.gz`

  - Unzip and untar the archive: `sudo tar xzf google-refine-2.0-r1836.tar.gz`

  - Start Google Refine from the extracted directory: `cd google-refine-2.0`, then `sh refine -i 0.0.0.0 -m 8000M`

  - This means: start Refine, listen on all network interfaces and give it 8 GB of memory (`-m` sets the maximum Java heap size). Hey, that’s what we need when playing with data!

  - Google Refine starts (note that the workspace directory shown in the log is under /root, not on the EBS volume):

```
Starting Google Refine at 'http://0.0.0.0:3333/'
10:11:01.905 [refine_server] Starting Server bound to '0.0.0.0:3333' (0ms)
10:11:01.906 [refine_server] Max memory size: 8000M (1ms)
10:11:01.955 [refine_server] Initializing context: '/' from '/mnt/refine/google-refine-2.0/webapp' (49ms)
10:11:03.288 [refine] Starting Google Refine 2.0 [r1836]... (1333ms)
10:11:03.297 [FileProjectManager] Using workspace directory: /root/.local/share/google/refine (9ms)
10:11:03.299 [FileProjectManager] Loading workspace: /root/.local/share/google/refine/workspace.json (2ms)
```
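For the /etc/fstab step of the volume setup, an entry along these lines should work. It is a sketch that assumes the volume shows up as /dev/sdf (the device used with mkfs.ext3 in the steps above) and carries that ext3 filesystem; on newer kernels the same volume may appear as /dev/xvdf instead.

```
# /etc/fstab: mount the EBS volume on /mnt/refine (device name assumed)
/dev/sdf    /mnt/refine    ext3    defaults,noatime    0 0
```

`noatime` is optional and only avoids a metadata write on every file read; the final `0` skips the boot-time fsck for this volume.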
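For the bash profile step of the JDK install, these are the kind of lines to add to ~/.bash_profile. This is a minimal sketch that assumes the installer was run from /mnt/refine: the self-extracting JDK 6 installer unpacks into a jdk1.6.0_24 directory under the current directory, so point JAVA_HOME to wherever that directory actually ended up.

```shell
# Additions to ~/.bash_profile.
# Assumption: the JDK installer was run in /mnt/refine, creating jdk1.6.0_24 there.
export JAVA_HOME=/mnt/refine/jdk1.6.0_24
# Put this JDK's java ahead of any system-wide Java in the search path.
export PATH="$JAVA_HOME/bin:$PATH"
```

Run `source ~/.bash_profile` (or log in again), and `java -version` should then report version 1.6.0_24.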

Time to play now!

OK, most of the work is done. I hope this quick AWS EC2 walkthrough is enough for most of you. If not, feel free to contact me.

Now let’s play with Google Refine. Simply open your browser and point it to the Elastic IP address you assigned to your instance: `http://<your-ip-address>:3333/`

… and you are done !

OK, I have some more time, so let’s create a simple project. Simply choose a file in the Data File zone, name your project and provide some more information about your file: separator, header, limit, auto-detection of value types...

After a short while, booom, your file is loaded and you have access to your data, ready to work on it.

Stay tuned for the upcoming articles: I will show you how to fully leverage Google Refine and how to enrich your data with spectacular added value and services.

One last thing: you definitely need to go and read my friend’s blog about data mining. His name is Matthias Oehler (dataminer), and he is a kind of R and Google Refine wizard. You will learn a lot from his articles as soon as his website opens (in a few hours to a few days, according to him...).

More information

- You can also compile and deploy Google Refine on Google App Engine, which is an even better solution.

- You can find some screencasts about Google Refine here.

Feel free to contact me if needed.
