Hi all,

I said it, I did it. After coding a SDB bulk loader a few days ago, based on the batch insert feature from the AWS SDK, I coded a bulk extractor.

You said bulk, or Hulk ?

What’s the theory ?

sdb_extractor (its name) was coded with the following features in mind :

- multi query : you can ask multiple SQL orders (selects of course) to be executed and having the data extracted.

- multi domain : you can query multiple domains at the same time, using the same program execution,

- multi threaded : each query has its own thread !

- multi output format : each query generates data that goes into data files. These data files can have different delimiters, different names, different output formats (including json),

- query validation : each query is first tested and only the relevant ones (good syntax and existing data in the domain) will be executed (into its own thread as I said before).

How does it work ?

Simple as usual. A simple program needs a simple usage. Better than a long speech, let’s have a look at the –help command :

As you can see, nothing really complex. You have to provide the program with 2 parameters :

- --aws-credentials : a file containing your aws credentials. Usual syntax from aws (access key and private key),

- -- query-file : a file containing the queries you want to execute and grab the data coming from. Let’s have a close look to this file now.

The control file : the query file.

This file is necessary in order to give instructions to sdb_extractor. Its syntax is very simple, here is the data format (without brackets) :

[SQL query order];[output file];[delimiter];[sdb domain]

… that gives in real life :

select `itemName()`, `Att1` from `your_domain` limit 2000;sdb_extract1.txt;semicolon;sdb.eu-west-1.amazonaws.com

select * from `your_domain_2`;sdb_extract2.txt;pipe;sdb.eu-west-1.amazonaws.com

select * from `your_domain_3`;sdb_extract3.txt;json;sdb.eu-west-1.amazonaws.com

The first query is asking the key (itemName()) and one attribute (Att1), is giving a limit of 2000 (chunks of 2000 lines will be streamed to the data file until no more rows remain), is asking for a txt file output having a semicolon delimiter. This query has to be run upon the EU West SDB domain.

Please note :

- The query file delimiter is : ;

- You can put as many queries as you want

- You can put as many lines as you want

The values for the delimiters are the following :

- semicolon,

- colon,

- pipe,

- json : in that case, a json syntax will be streamed to the export file.

Pretty simple, hum ?

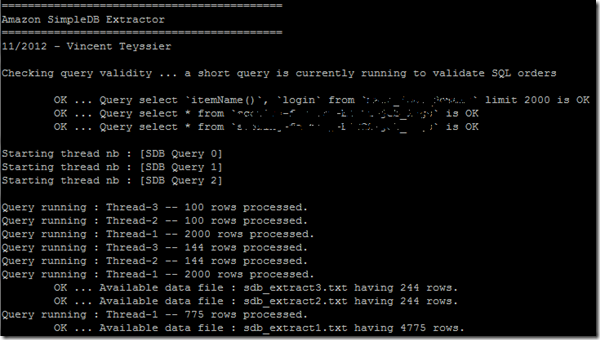

Here is the program output after a full execution. As you can see, the execution is divided into 3 main parts :

- Query validation, showing OK or NOK (here only OK),

- Query running, with a counter. Note iterations with 2000 rows come from the “limit 2000” clause from the SQL query, while iterations with 100 rows are the default from SDB.

- Final output, showing OK for any successfully created file.

What happens when I write a bad SQL order ?

Very simple, the bad query will be trapped early and won’t generate any extraction thread. I managed to print some useful information from AWS. Here is an example of a failed query (I intentionally remove a back tick from the domain name).

I want to try it !

No problem. Just download the package here. Includes the jar and a query file for example.

Performance

As usual, performance is a lot better when running the program from within Amazon EC2. In order to have and additional boost for your extraction, you could for instance create several SQL orders for the same domain/table but using different WHERE clause in order to “partition” your query. It works.

More to come.

I’m currently adding some data streaming to s3 features !! Sounds usefull for backup jobs …

As usual, please, give me feedback either positive or negative. I received a *lot* of feedback from the previous sdb_loader, thanks for that !! Please continue !

540 comments:

«Oldest ‹Older 801 – 540 of 540Post a Comment