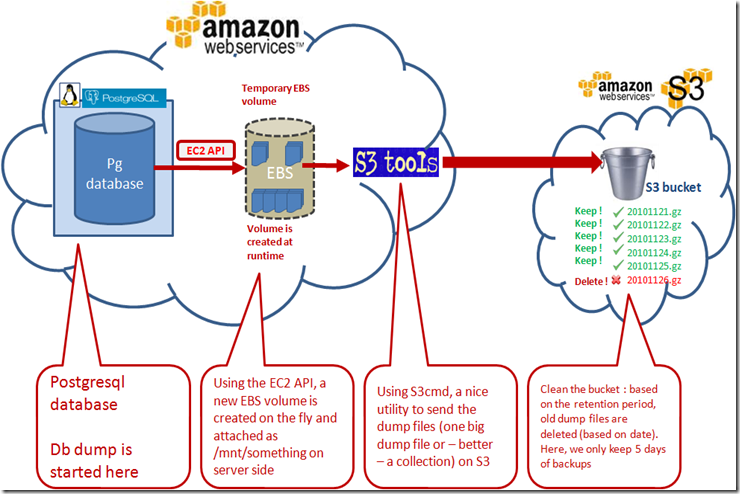

Here you can find a new and customized approach for Postgresql dumps and storage on S3. Based on my previous post, this script uses the EC2 API and has new features and will :

- Create an EBS volume on EC2,

- Prepare the EBS volume filesystem and mount it on you database server (in EC2 cloud),

- Run the postgresql dump utility and store the dumps on the EBS volume,

- Rend the dump files to S3, into the bucket you want,

- Manage dump file collections in the bucket : clean / delete the previous dump files according to the retention date/period,

- Un-mount and delete the EBS volume, like that you no longer pay for something you don’t need / don’t use.

Here is the script descriptions :

- Create the temporary EBS volume,

- VOLUME=`ec2-create-volume --size $VOL_SIZE --region $REGION -z $AVAIL_ZONE | cut -f2`

- Building the filesystem and mounting as /mnt/something

- STATE=`ec2-attach-volume $VOLUME --instance $INSTANCE --device $DEVICE --region $REGION | cut -f5`

- Start database dump

- su - postgres -c "$PG_DUMP $BASE | gzip | split -b $SPLITS - $DUMP_FILE"

- Send the dump files to S3 with s3cmd

- s3cmd -p put $DUMP_FILE* $S3ENDPOINT

- Detaching the EBS Volume

- STATE=`ec2-detach-volume $VOLUME --region $REGION | cut -f5`

- Clean up the historical dump files on S3, based on

- for FILENAME in `$S3PUT ls $S3ENDPOINT | cut -d":" -f3` ====> delete

Below, you can find the script. Please note :

- use database_name = bucket name, for convenience. Otherwise you will have to modify the script.

- use database_name as parameter $1.

- the final routine (lookup on s3 bucket files for cleanup) is a modified version of the one found here.

- Be sure you have a working installation for the EC2 API (+path)

- Variables to check :

- Java path

- Availability zone (EC2)

- Region (EC2)

- Device (something from sdf to sdxxxx)

- Dump dir : will be hosted on the temp EBS once mounted on /mnt/something

- Volume size : the size of the volume to create. Be sure you have enough space for your dumps

- Splits : size for multi dump files.

#!/bin/bash

######################################################

# #

# POSTGRES startup script #

# Author : Vincent Teyssier #

# Date : 20/11/2010 #

# #

#####################################################

#

# General variables

export JAVA_HOME="/mnt/postgres/jdk1.6.0_21"

export EC2_HOME="/mnt/postgres/ec2-api-tools-1.3-53907"

export EC2_PRIVATE_KEY="/mnt/postgres/ec2-api-tools-1.3-53907/keys/pk-file.pem"

export EC2_CERT="/mnt/postgres/ec2-api-tools-1.3-53907/keys/cert-file.pem"

export JDK_HOME="${JAVA_HOME}"

export PATH="${JAVA_HOME}/bin:${PATH}"

export PATH="$PATH:$EC2_HOME/bin"

# EC2 Variables

AVAIL_ZONE="eu-west-1a"

REGION="eu-west-1"

INSTANCE="your EC2 instance id"

DEVICE="/dev/sdh"

DUMP_DIR="/mnt/postgres/dumps"

VOL_SIZE="100" # in mb

# Dump variables

PG_DUMP="/usr/lib/postgresql/8.4/bin/pg_dump"

S3PUT="/mnt/postgres/s3tools/s3cmd-1.0.0-rc1/s3cmd"

BASE=$1

SPLITS="70m"

DUMP_TIME=`date +"%Y%m%d"`

DUMP_FILE="$DUMP_DIR/$DUMP_TIME.gz"

S3ENDPOINT="s3://postgresql-dumps/pilotroi/$BASE/"

echo "***********************************************"

echo "* DUMP/ BACKUP PROCESS *"

echo "* Starting at :" `date`

echo "***********************************************"

# Create volume

echo ""

echo "Create Volume"

VOLUME=`ec2-create-volume --size $VOL_SIZE --region $REGION -z $AVAIL_ZONE | cut -f2`

while ! ec2-describe-volumes $VOLUME --region $REGION | grep -q available; do sleep 1; done

echo "Created volume " $VOLUME "with size of " $VOL_SIZE " Gb"

# Attaching volume

echo "***********************************************"

echo "Now attaching volume"

STATE=`ec2-attach-volume $VOLUME --instance $INSTANCE --device $DEVICE --region $REGION | cut -f5`

while ! ec2-describe-volumes $VOLUME --region $REGION | grep -q attached; do sleep 1; done

echo "Volume " $VOLUME "has state : " $STATE

# Building filesystemm and mounting

echo "***********************************************"

echo "Now building filesystem"

while [ ! -e $DEVICE ]; do echo -n .; sleep 1; done

sudo mkfs.ext3 -F $DEVICE

if [ ! -d $DUMP_DIR ]

then

sudo mkdir $DUMP_DIR

fi

sudo mount $DEVICE $DUMP_DIR

sudo chown postgres:postgres $DUMP_DIR

sudo chmod 777 $DUMP_DIR

su - postgres -c "touch file.txt"

echo "Volume " $VOLUME "mounted on " $DUMP_DIR

# Dumping

echo "**********************************************"

echo "Dump started at " `date`

echo "su - postgres -c $PG_DUMP $BASE | gzip | split -b $SPLITS - $DUMP_FILE"

su - postgres -c "$PG_DUMP $BASE | gzip | split -b $SPLITS - $DUMP_FILE"

echo "Dump ended at " `date`

# Sending

echo "**********************************************"

echo "Send to S3 started at " `date`

$S3PUT -p put $DUMP_FILE* $S3ENDPOINT

echo "Send ended at " `date`

#echo "**********************************************"

#echo "Deleting local dump files"

#rm $DUMP_DIR/$BASE.gz*

# Cleaning all

sudo umount $DUMP_DIR

echo "$DUMP_DIR unmounted"

sudo rm -Rf $DUMP_DIR

# Detaching volume

echo "***********************************************"

echo "Now detaching volume"

STATE=`ec2-detach-volume $VOLUME --region $REGION | cut -f5`

while ! ec2-describe-volumes $VOLUME --region $REGION | grep -q available; do sleep 1; done

echo "Volume $VOLUME has now state : $STATE and can be deleted"

ec2-delete-volume $VOLUME --region $REGION

echo "Volume $VOLUME currently deleting"

echo "Database dump is finished at : " `date`

echo "***********************************************"

echo "Cleanup process"

echo "Starting at :" `date`

LIMIT=`date --date="5 day ago" +"%Y%m%d"`

echo $LIMIT

echo `date '+%F %T'` - Timestamp of 5 days ago: $LIMIT

echo `date '+%F %T'` - Getting the list of available backups

TOTAL=0

for FILENAME in `$S3PUT ls $S3ENDPOINT | cut -d":" -f3`; do

if [[ $FILENAME =~ ([0-9]*)\.gz* ]]

then

echo ${BASH_REMATCH[1]}

TIMESTAMP=${BASH_REMATCH[1]}

echo `date '+%F %T'` - Reading metadata of: $FILENAME

echo -e "\tFilename: $FILENAME"

echo -e "\tTimestamp: $TIMESTAMP"

if [[ $TIMESTAMP -le $LIMIT ]]; then

let "TOTAL=TOTAL+1"

echo -e "\tResult: Backup deleted\n"

$S3PUT del "s3:$FILENAME"

else

echo -e "\tResult: Backup keeped\n"

fi

fi

done

echo `date '+%F %T'` - $TOTAL old backups removed

It’s working nicely on all my postgres servers. I’m currently finalizing a custom script for retrieving and re-creating a database back from the dump file collection.

Feel free to contact me for any question.

No comments:

Post a Comment